As we heard from Adobe’s Dennis Radeke at the recent Dalet/Adobe event in New York, change in the video industry is never evolutionary: it’s always revolutionary. Dennis pointed out that just as we’re getting comfortable with HD, all of a sudden we need to do it in Ultra HD!

Today we’re experiencing an explosion of content, driven largely by a corresponding explosion in HD capture devices and amplified by today’s hyper-connected world.

Against this background, Adobe is striving to achieve and maintain a market leadership position. To do so, it needs strong relationships with like-minded companies whose technologies and platforms complement its own. The purpose of the well-attended New York event was to spotlight the relationship between Dalet and Adobe, illustrating how customers can combine the two companies’ offerings to move beyond traditional file-based workflow approaches toward a more interactive and collaborative model.

In his presentation, Dennis explained that continually delivering new and improved feature sets, and enabling new workflows within media enterprise operations, is central to Adobe maintaining its leadership position. With Adobe Premiere CC 2014, the company has effectively rewritten the rulebook for file-based workflows, and the platform is enjoying tremendous success with users and media operations worldwide.

However, Dennis was quick to acknowledge that no company offers a complete solution, from cradle to grave. He recognized that how users move into and out of the Adobe portion of the production workflow is key to their success. To this end, Adobe has invested heavily in an open architecture approach called Content Panels, which provides the doorway into Adobe Premiere.

Some time ago at Dalet, we recognized the potential that Content Panels offer to create a new breed of supercharged production workflows and interactions. The combination of Adobe Premiere and Dalet’s Galaxy MAM platform is a prime example of the superior extended workflows that users can create within a single integrated user interface.

Dennis also explained how Adobe is moving into the area of collaborative editing. With Adobe Anywhere, the company has developed an enterprise-class editing platform that is generating a lot of interest from the post-production community. Combined with a MAM system alongside it, this platform is the ideal solution for organizations that want greater visibility into, collaboration on, and oversight of their rich content.

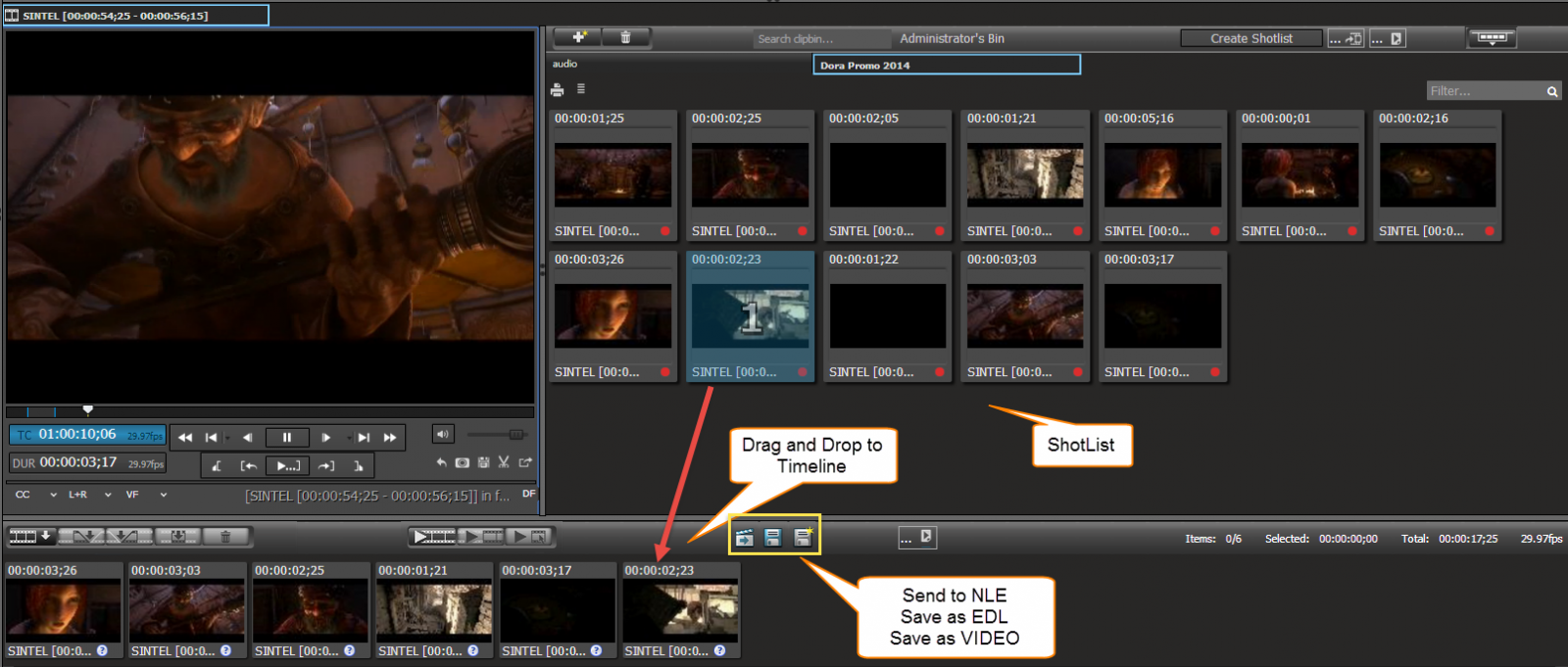

So, how does the Dalet Adobe Premiere plug-in work?

At Dalet, we have developed an intuitive Adobe Premiere plug-in that lets users work with our well-established browse, preview (in high-res or proxy resolution) and search facilities directly within the Premiere user interface.

Loading an asset into this interface requires no media movement: editing happens in place and collaboratively with other editors, even while recording is still in progress. Importantly, Dalet MAM asset and logging metadata flow through the editing process, giving the editor relevant metadata whether it comes from producers (such as editorial notes) or from automated systems (such as closed captions or QC results).

With Dalet WebSpace tools, other team members can collaborate and contribute editorially at the same time as the editors. Our web-based Dalet Storyboarder gives any user a simple, intuitive way to gather shots quickly and accurately in either private or shared bins, accessible to both Galaxy and Premiere users.

This approach can be used for anything from simple clipping, accessible by all, to full-fledged storyboarding, where you can assemble and review a sequence before sharing it with craft editors for more complex editing, or save that storyboard as an asset for everyone to reuse later.

This means that within Adobe Premiere, all Dalet MAM audio, video, clip and EDL assets can be opened in place, with all of the parent asset’s metadata flowing through. Finished Premiere projects or sequences can also be saved as Dalet assets, making them available for reuse by all authorized users, concurrently. In addition, conformed Premiere projects inherit the metadata of all the parent assets used to create them, such as editorial notes, captions and rights, and that metadata in turn flows through to the finished piece in the MAM catalog. For example, you don’t need to re-caption a promo if the sources you used already had captions.

Finally, with the advent of Adobe Anywhere, the same Dalet plug-in will soon offer the same user experience while connected to the Dalet MAM catalog, except that the content will be served to the editor (in the field, for example) via the Adobe Anywhere streaming and rendering platform.

At Dalet, we are really excited about the potential that this collaborative development project offers to broadcasters and facilities of all sizes. It illustrates what can be produced when like-minded companies come together with a shared vision and determination.